There was a time when Hollywood was the undisputed champion of entertainment, with its blockbuster releases and red-carpet glamor. But times are changing, and it appears that the big tech companies of Silicon Valley are gradually stealing the show—or should we say, the movies?

The technological titans of Silicon Valley have entered the entertainment game, and they are not playing around. With the advent of streaming services like Amazon Prime, Apple TV+, and Google Play Movies, the big tech companies are offering a plethora of movies and TV shows to audiences at the click of a button. They can make data-driven decisions, target niche audiences, and operate on a global scale. The versatility and the ability to adapt quickly give them an advantage over traditional Hollywood studios.

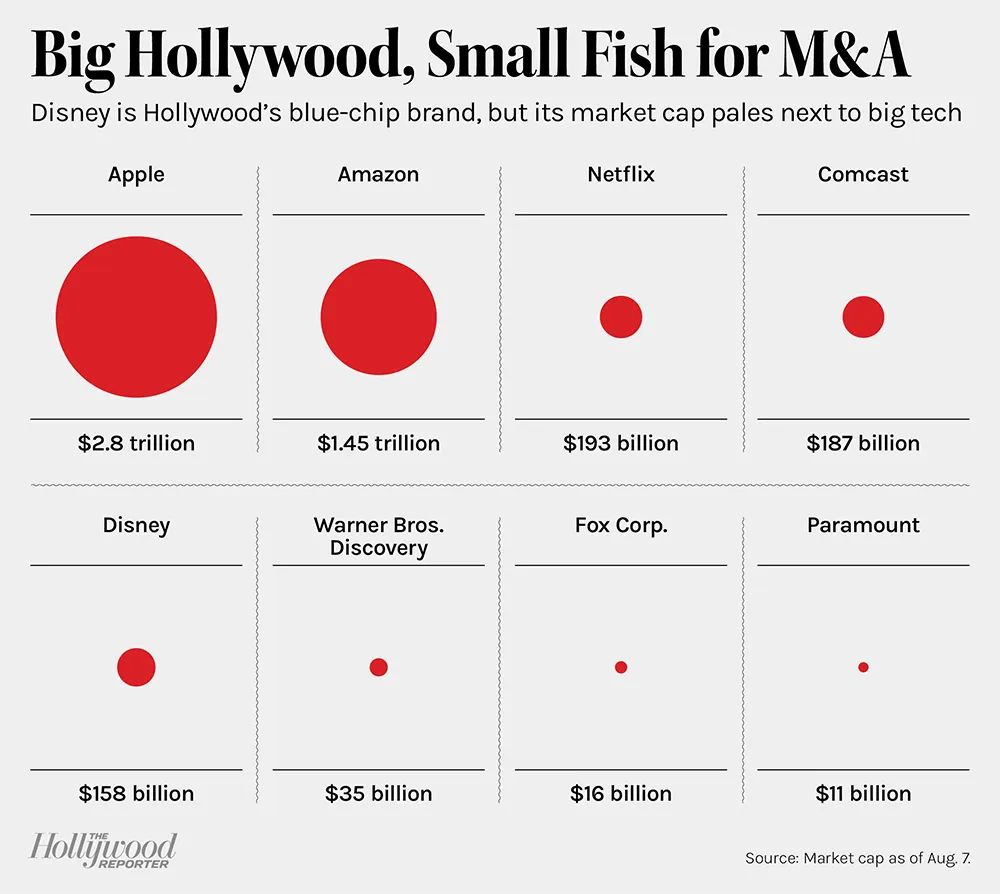

Let's talk numbers. The big tech companies dwarf even the biggest Hollywood conglomerates. For instance, in 2021, Apple Inc.'s market cap exceeded $2 trillion, while the largest Hollywood media conglomerates, like Disney, were valued at a few hundred billion dollars. This difference in scale allows tech companies to invest more, take greater risks, and capture market share more effectively.

If there is one domain where big tech hasn't yet completely taken over, it is the cinema. Cinemas remain a unique space where audiences can experience films without the direct influence or intervention of tech companies. However, even this is changing, as many people now choose the convenience of streaming over the cinematic experience.

Streaming services have allowed Hollywood studios to reach a larger and more diverse audience, but the studios are also walking into a trap. By making streaming their primary method of content distribution, they cede more and more ground to the tech companies that already control the digital infrastructure and the algorithms that recommend what to watch.

Here's another catch: Hollywood studios need to make money from their streaming offerings to stay afloat, while big tech companies don’t necessarily have to. With diverse income streams, from cloud computing to advertising, big tech can afford to play the long game in the entertainment industry.

Hollywood should focus on capturing more value in areas where big tech companies either don't want to be or find it difficult to scale. These could include live events, theater shows, or experience-based theme parks. Hollywood needs to go beyond the screen and offer something that tech companies can’t easily replicate.

To reclaim its position, Hollywood needs to go back to its roots—making more original, high-quality movies that compel audiences to visit theaters. The nostalgia, the popcorn, the community feeling of a packed theater laughing and crying together—these are experiences that technology can’t fully replicate. Hollywood should also consider capturing more value from in-theater consumables like popcorn and soda, or advertisements, to maintain profitability.

The tech giants of Silicon Valley have shifted the dynamics of the entertainment industry. However, all is not lost for Hollywood. By focusing on its strengths—original content, cinematic experiences, and areas difficult for tech companies to enter—Hollywood can still put up a good fight. After all, in the movies, it's always darkest before the dawn.

Image copyright © Blackmagic Design Pty. Ltd. 2019
